A single second of hand-animated character motion can take an experienced animator hours. Entire productions are bottlenecked by it.

We're building AI that generates high-quality motion from just a few key poses — keeping the animator in creative control while compressing weeks of work into minutes. The underlying models are grounded in our own research on generative modelling, published at NeurIPS and ICLR.

Our approach

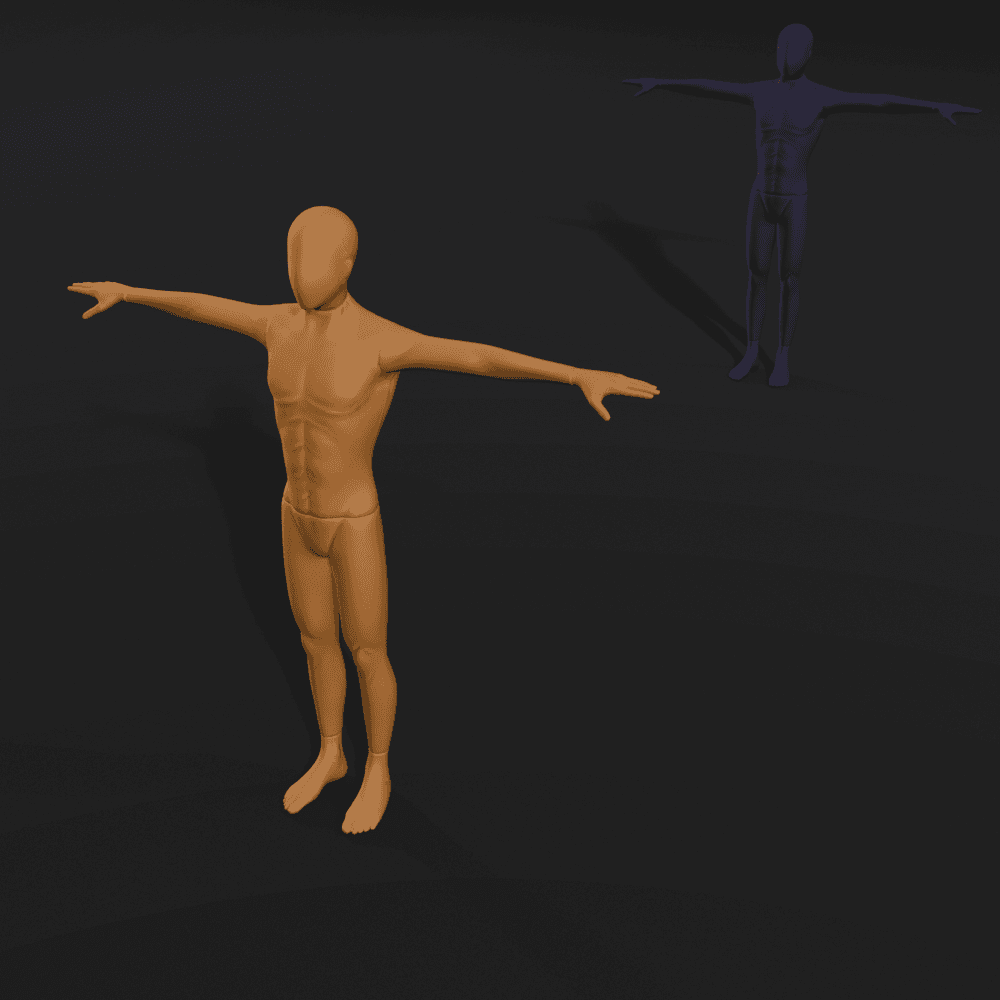

Direct

You set the keyframes.

The model respects them.

Place key poses at the moments that matter — story beats, timing, emotion. The AI treats every keyframe as a hard constraint and generates natural transitions between them. Your creative intent is preserved exactly.

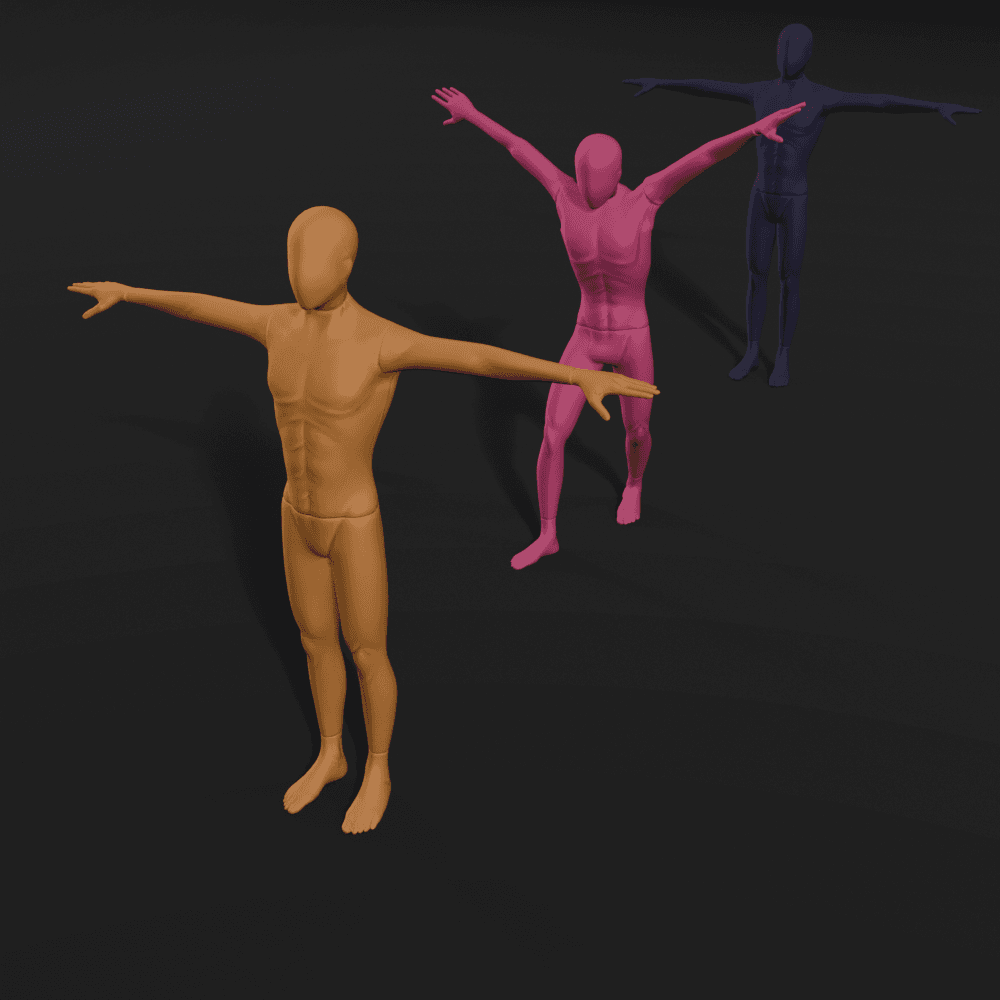

Generate

Same direction.

Infinite takes.

From the same set of keyframes, the model generates diverse, physically plausible motion — each variation stylistically coherent. Explore options instantly instead of animating each one by hand.

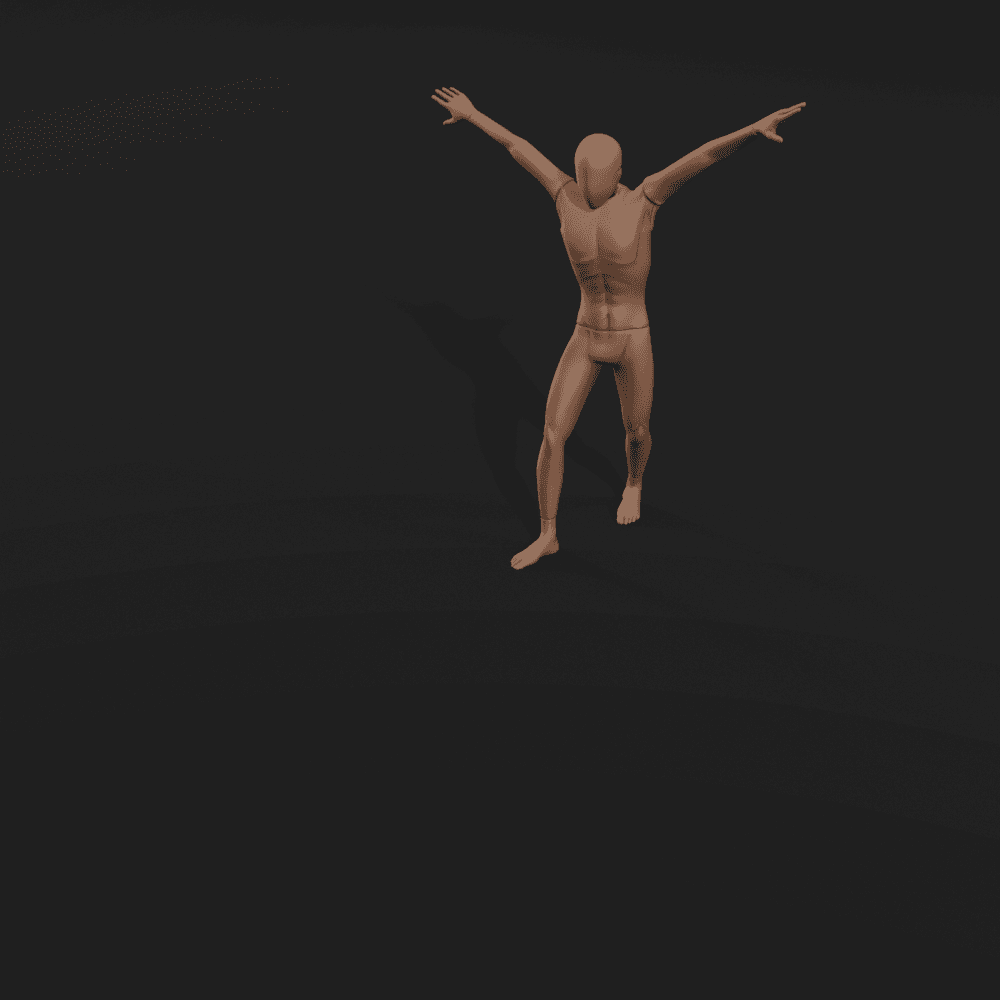

Refine

Adjust anything.

Regenerate the rest.

Move a pose, add a constraint, regenerate a segment. Every change propagates through the model while preserving what you've already approved. You stay in the loop at every frame.

What this changes

When realistic motion can be generated in seconds instead of hours, the entire production equation shifts.

Faster iteration

Explore motion ideas in seconds. Try ten variations before committing to one — without blocking the rest of the pipeline.

More output, same team

Generate high-quality motion at a pace that used to require much larger animation teams. Scale production without scaling headcount.

Creative control preserved

This isn't auto-generation. Every keyframe you set is respected. Every frame you adjust stays adjusted. The AI fills in — you sign off.

Works with your pipeline

Standard skeletal formats. Exports to your existing tools. Designed to integrate into professional workflows, not replace them.

Diverse, natural motion

The model produces physically plausible, stylistically varied results — not canned clips or blended mocap. Each generation is unique.

Research-driven

Built on our own generative modelling research, published at NeurIPS and ICLR. The science is ours — so is the product roadmap.

Team

Animatica was founded to bring together deep generative modelling research and decades of production animation experience.

Paweł Pierzchlewicz

CEO & AI Researcher

PhD in AI/ML. Publications at NeurIPS and ICLR on generative models and motion synthesis.

Mateusz Tokarz

CCO & VFX Supervisor

Emmy-nominated VFX Supervisor. 15+ years on Hollywood productions and AAA games.

See it in action.

We'll run a live demo with your characters, your rigs, your production constraints. No slide decks — just the system working on your data.

Request a demo

We're a small research team and we're hiring.

View open roles →